6 Prompt Techniques

(source: @ChrisLaubAI)

Top engineers at OpenAI, Anthropic, and Google don’t prompt like you do.

They use 6 techniques that turn mediocre outputs into production-grade results.

Technique 1: Constraint-Based Prompting

Most prompts are too open-ended. Engineers add hard constraints that force the model into a narrower solution space, ruling out many bad outputs before they happen.

Template:

Generate [output] with these non-negotiable constraints:

- Must include: [requirement 1], [requirement 2]

- Must avoid: [restriction 1], [restriction 2]

- Format: [exact structure]

- Length: [specific range]

Example:

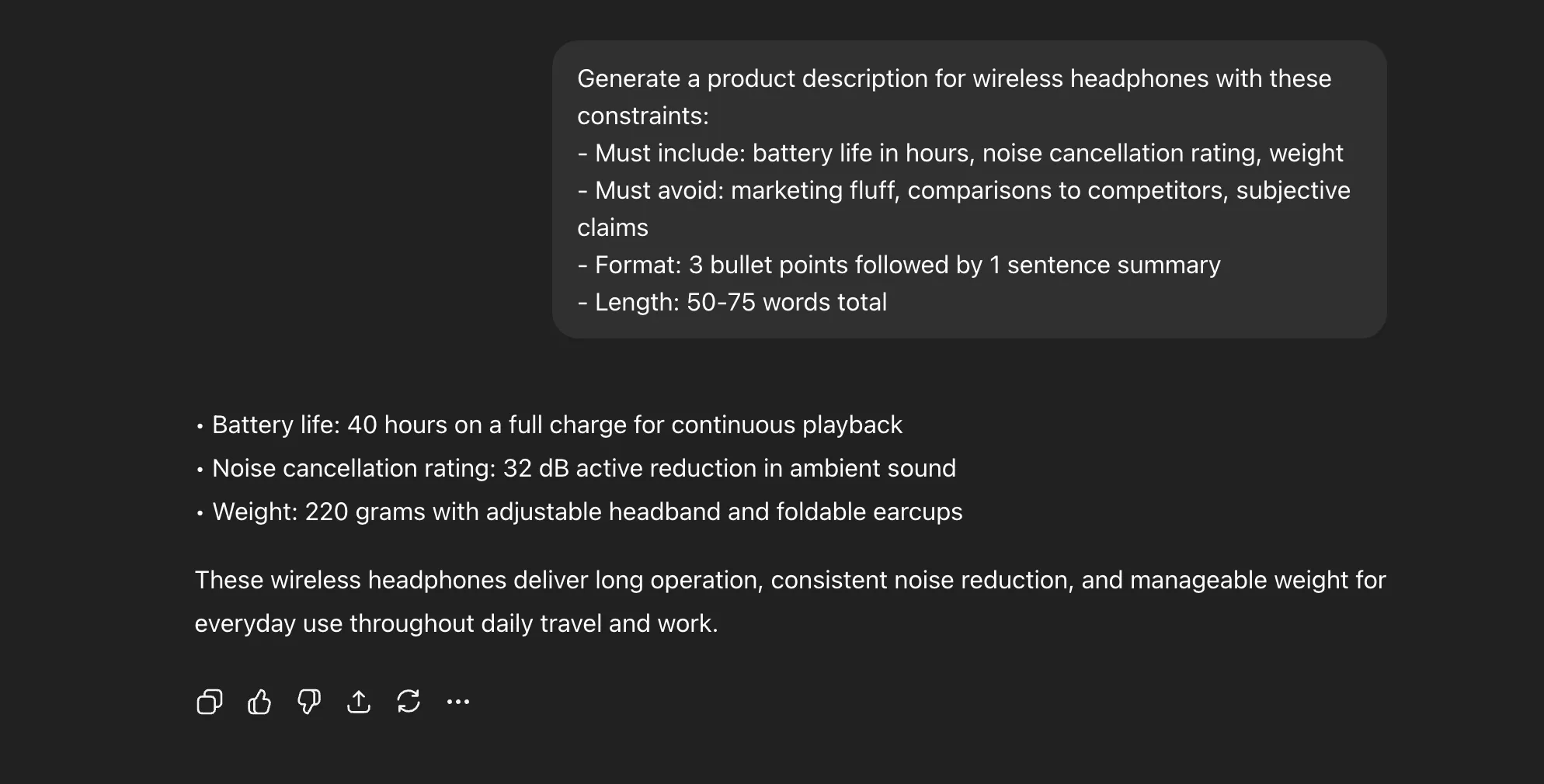

Generate a product description for wireless headphones with these constraints:

- Must include: battery life in hours, noise cancellation rating, weight

- Must avoid: marketing fluff, comparisons to competitors, subjective claims

- Format: 3 bullet points followed by 1 sentence summary

- Length: 50-75 words total
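Hard constraints are also easy to check after the fact. A minimal Python sketch for the headphone example; the regexes and the banned-phrase list are hypothetical heuristics, not a complete definition of "marketing fluff" or "subjective claims":

```python
import re

# Hypothetical heuristics for the headphone example; real rules would be
# agreed with whoever owns the product copy.
REQUIRED = {
    "battery life in hours": r"\b\d+\s*(?:hours|hrs)\b",
    "noise cancellation rating": r"\b\d+\s*dB\b",
    "weight": r"\b\d+\s*(?:g|grams|oz)\b",
}
BANNED_PHRASES = ["best-in-class", "revolutionary", "unmatched", "better than"]


def check_description(text: str) -> list[str]:
    """Return constraint violations; an empty list means every check passed."""
    problems = []
    lines = [ln.strip() for ln in text.splitlines() if ln.strip()]
    bullets = [ln for ln in lines if ln.startswith("- ")]
    others = [ln for ln in lines if not ln.startswith("- ")]

    # Format: 3 bullet points followed by a single summary line.
    if len(bullets) != 3:
        problems.append(f"expected 3 bullets, found {len(bullets)}")
    if len(others) != 1 or lines[-1] != others[0]:
        problems.append("expected exactly one summary line after the bullets")

    # Length: 50-75 words, not counting the "-" bullet markers.
    words = [w for w in text.split() if w != "-"]
    if not 50 <= len(words) <= 75:
        problems.append(f"expected 50-75 words, found {len(words)}")

    # Must include: each required fact needs a number with a unit.
    for name, pattern in REQUIRED.items():
        if not re.search(pattern, text, re.IGNORECASE):
            problems.append(f"missing {name}")

    # Must avoid: flag any banned phrase (case-insensitive).
    lowered = text.lower()
    problems += [f"banned phrase: {p}" for p in BANNED_PHRASES if p in lowered]
    return problems


SAMPLE = """\
- Battery life: 30 hours per charge, with a 10-minute quick charge adding roughly 3 hours of playback.
- Active noise cancellation reduces ambient sound by up to 35 dB across low and mid frequencies.
- Weight: 250 g, with memory-foam ear cushions and an adjustable steel headband.
These over-ear wireless headphones pair over Bluetooth 5.3 and fold flat for storage in the included case.
"""

print(check_description(SAMPLE))  # → []
```

Any non-empty result can be pasted back to the model as the reason for a retry.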

Technique 2: Multi-Shot with Failure Cases

Everyone uses examples. Engineers also show the model what NOT to do. This draws boundaries that positive examples alone can’t establish.
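Assembling this kind of prompt is plain string formatting. A minimal sketch (`ContrastExample` and `build_prompt` are hypothetical names) that keeps each good/bad pair together with its failure reason, so the reason can't be silently dropped:

```python
from dataclasses import dataclass


@dataclass
class ContrastExample:
    good: str
    bad: str
    reason: str  # why the bad example fails; the part most people leave out


def build_prompt(task: str, example: ContrastExample, request: str) -> str:
    """Render the Task / Good / Bad / Reason / Now-do-this template as one prompt."""
    return "\n\n".join([
        f"Task: {task}",
        f"Good example:\n{example.good}",
        f"Bad example:\n{example.bad}",
        f"Reason it fails: {example.reason}",
        f"Now do this: {request}",
    ])
```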

Template:

Task: [what you want]

Good example:

[correct output]

Bad example:

[incorrect output]

Reason it fails: [specific explanation]

Now do this: [your actual request]

Example:

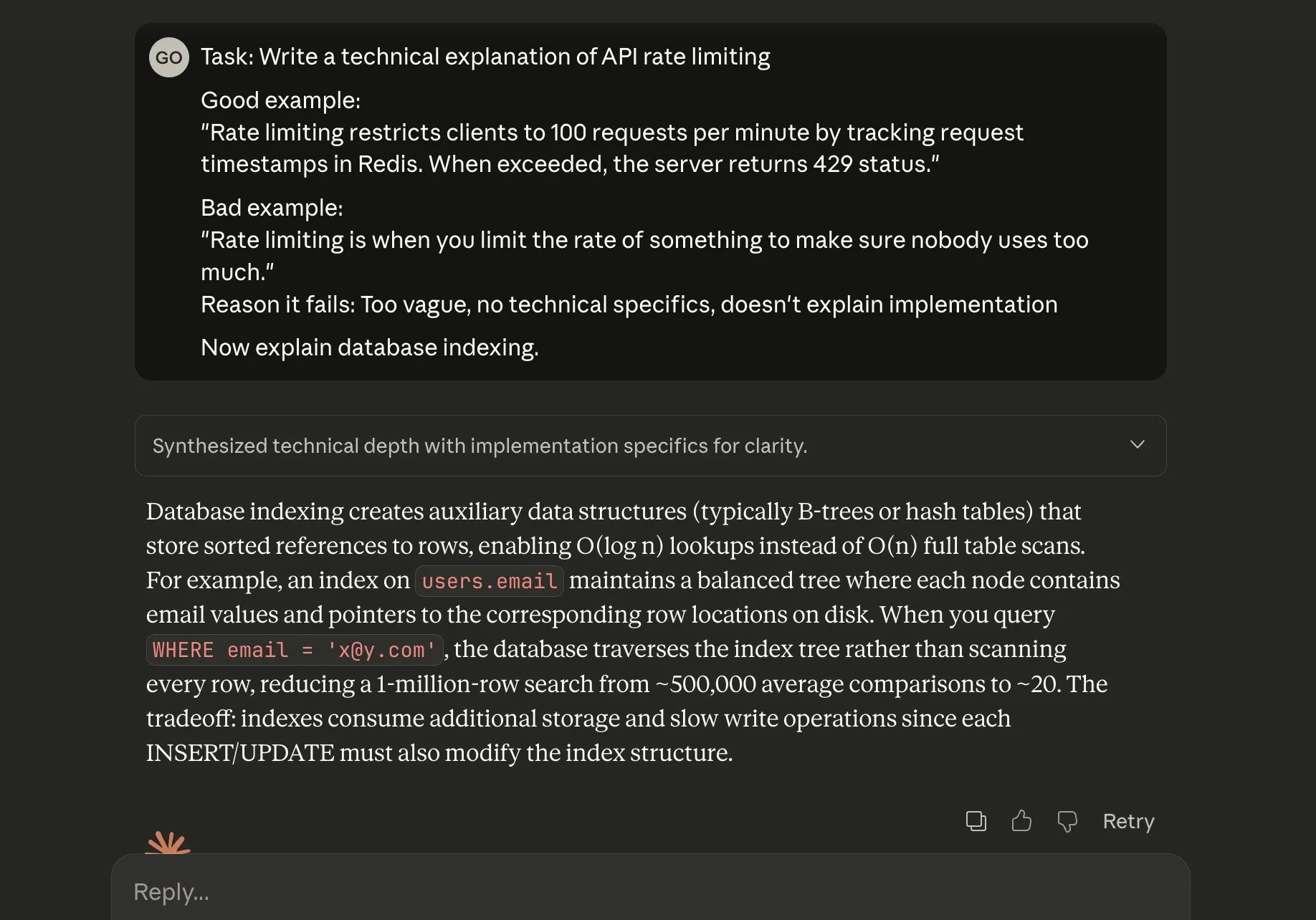

Task: Write a technical explanation of API rate limiting

Good example:

"Rate limiting restricts clients to 100 requests per minute by tracking request timestamps in Redis. When the limit is exceeded, the server returns HTTP 429 (Too Many Requests)."

Bad example:

"Rate limiting is when you limit the rate of something to make sure nobody uses too much."

Reason it fails: Too vague, no technical specifics, doesn't explain implementation

Now explain database indexing.
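For reference, the mechanism described in the good example (100 requests per minute, tracked by timestamp, 429 when exceeded) can be sketched as a sliding-log limiter. This version keeps timestamps in an in-process dict instead of Redis, an assumption made so the sketch is self-contained; a shared store like Redis is what lets several servers enforce one limit.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """At most `limit` requests per `window` seconds per client (sliding log)."""

    def __init__(self, limit: int = 100, window: float = 60.0, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock  # injectable so tests can control time
        # Assumption: in-process dict stands in for Redis; not shared across processes.
        self.log = defaultdict(deque)  # client_id -> timestamps of accepted requests

    def check(self, client_id: str) -> int:
        """Return an HTTP status: 200 if allowed, 429 if over the limit."""
        now = self.clock()
        stamps = self.log[client_id]
        # Drop timestamps that have slid out of the window.
        while stamps and now - stamps[0] >= self.window:
            stamps.popleft()
        if len(stamps) >= self.limit:
            return 429
        stamps.append(now)
        return 200
```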

Technique 3: Metacognitive Scaffolding

Instead of asking for an answer, engineers ask the model to explain its reasoning process BEFORE generating. This catches logical errors at the planning stage.

Template:

Before you [generate output], first:

1. List 3 assumptions you're making

2. Identify potential edge cases

3. Explain your approach in 2 sentences

Then provide [the actual output].

Example:

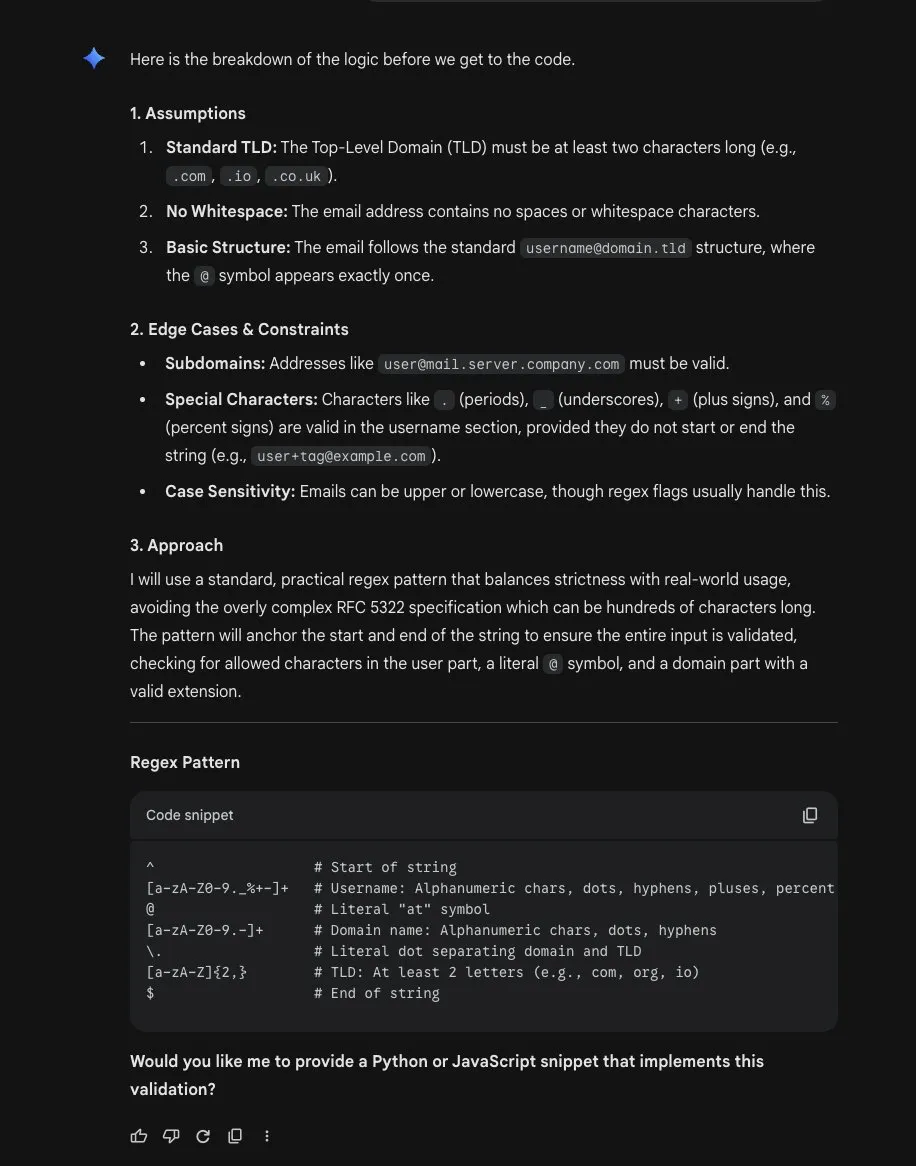

Before you write a regex pattern to validate email addresses, first:

1. List 3 assumptions you're making about valid email formats

2. Identify potential edge cases (unusual domains, special characters, etc.)

3. Explain your approach in 2 sentences

Then provide the regex pattern with inline comments.
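A sketch of the kind of answer that prompt aims for, with the assumptions stated up front as the technique asks. The rules are assumptions chosen for illustration and are deliberately simpler than RFC 5322: the pattern rejects quoted local parts and IP-literal domains, and it still accepts consecutive dots in the local part.

```python
import re

# Assumed rules for this sketch (simpler than RFC 5322):
#   1. ASCII only: no quoted local parts, no IP-literal domains.
#   2. Local part: letters, digits and . _ % + -
#   3. Domain: dot-separated labels that start and end with a letter or digit,
#      ending in a TLD of 2+ letters.
# Edge case NOT handled: consecutive dots in the local part ("a..b@x.com") pass.
EMAIL_RE = re.compile(
    r"""
    [A-Za-z0-9._%+-]+                                # local part
    @
    (?:[A-Za-z0-9](?:[A-Za-z0-9-]*[A-Za-z0-9])?\.)+  # domain labels, each ending in "."
    [A-Za-z]{2,}                                     # top-level domain
    """,
    re.VERBOSE,
)


def is_valid_email(address: str) -> bool:
    # fullmatch anchors both ends, so a trailing newline is rejected too.
    return EMAIL_RE.fullmatch(address) is not None
```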

Technique 4: Differential Prompting

Engineers don’t ask for one output. They ask for two versions optimized for different criteria, then pick or merge. This exploits the model’s ability to hold multiple solution strategies.
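For the duplicate-finding example in this section, the two versions might look like this (a sketch, assuming a list of integers so values are both hashable and sortable):

```python
def duplicates_fast(items: list[int]) -> set[int]:
    """Version A (speed): one pass with hash sets. O(n) average time, O(n) extra space."""
    seen, dupes = set(), set()
    for x in items:
        if x in seen:
            dupes.add(x)
        else:
            seen.add(x)
    return dupes


def duplicates_lean(items: list[int]) -> list[int]:
    """Version B (memory): sort in place, then compare neighbours. O(n log n) time.

    Avoids a hash set holding every element, but mutates `items`, and CPython's
    sort may still allocate a temporary buffer of up to about n/2 entries.
    """
    items.sort()
    dupes = []
    for i in range(1, len(items)):
        # Equal values are adjacent after sorting; record each duplicate once.
        if items[i] == items[i - 1] and (not dupes or dupes[-1] != items[i]):
            dupes.append(items[i])
    return dupes
```

The tradeoff is the point of the exercise: A spends memory to get linear time, B spends time (and the caller's original ordering) to avoid the extra set.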

Template:

Generate two versions of [output]:

Version A: Optimized for [criterion 1]

Version B: Optimized for [criterion 2]

For each, explain the tradeoffs you made.

Example:

Generate two versions of a function that finds duplicates in an array:

Version A: Optimized for speed (assume memory isn't a constraint)

Version B: Optimized for memory efficiency (assume large datasets)

For each, explain the tradeoffs you made and provide time/space complexity.

Technique 5: Specification-Driven Generation

Engineers have the model write a spec first, approve it, THEN generate. This separates “what to build” from “how to build it” and catches misalignment early.
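A minimal Python sketch of that split, using the password-validation example from this section: the spec is a frozen dataclass that can be reviewed and approved on its own, and the implementation only reads from it. The specific rules (12-character minimum, upper/lower/digit, no whitespace) are hypothetical placeholders for whatever you actually approve.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PasswordSpec:
    """The "what": hypothetical rules, reviewed and approved before implementing."""
    min_length: int = 12
    require_upper: bool = True
    require_lower: bool = True
    require_digit: bool = True
    allow_whitespace: bool = False


def validate_password(password: str, spec: PasswordSpec = PasswordSpec()) -> list[str]:
    """The "how": returns error messages; an empty list means the password passes.

    Uses str methods, so non-ASCII letters (e.g. "É") count toward the
    upper/lower rules, and length is measured in code points.
    """
    errors = []
    if len(password) < spec.min_length:
        errors.append(f"must be at least {spec.min_length} characters")
    if spec.require_upper and not any(c.isupper() for c in password):
        errors.append("must contain an uppercase letter")
    if spec.require_lower and not any(c.islower() for c in password):
        errors.append("must contain a lowercase letter")
    if spec.require_digit and not any(c.isdigit() for c in password):
        errors.append("must contain a digit")
    if not spec.allow_whitespace and any(c.isspace() for c in password):
        errors.append("must not contain whitespace")
    return errors
```

Changing a rule then means editing and re-approving the spec, not hunting through the implementation.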

Template:

First, write a specification for [task] including:

- Inputs and their types

- Expected outputs and format

- Key constraints or requirements

- Edge cases to handle

Ask me to approve before implementing.

Example:

First, write a specification for a password validation function including:

- Inputs and their types (what does the function accept?)

- Expected outputs and format (boolean? error messages?)

- Key constraints (min length, required characters, etc.)

- Edge cases to handle (empty strings, unicode, spaces)

Ask me to approve before implementing.

Technique 6: Chain-of-Verification

The model generates an answer, then immediately verifies it against stated requirements. This self-check catches errors that would otherwise slip through.
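Some checks can be run mechanically instead of by the model. For the SQL example in this section, a minimal sketch: execute a candidate query against a tiny in-memory SQLite fixture with a known answer. The schema (`users.last_login`, `purchases.purchased_at`), the fixture rows, and the pinned date `2024-06-30` are all hypothetical. User 1 has two recent purchases, so a join without `DISTINCT` shows up as duplicates; user 4 has no purchases, which exercises the third check.

```python
import sqlite3

# Hypothetical schema and fixture; "today" is pinned so the expected answer is fixed.
FIXTURE = """
CREATE TABLE users (id INTEGER PRIMARY KEY, last_login TEXT);
CREATE TABLE purchases (id INTEGER PRIMARY KEY, user_id INTEGER REFERENCES users(id), purchased_at TEXT);
INSERT INTO users VALUES (1, '2024-03-01'), (2, '2024-06-29'), (3, '2024-02-01'), (4, '2024-01-15');
INSERT INTO purchases (user_id, purchased_at) VALUES
  (1, '2024-06-15'), (1, '2024-06-20'),  -- recent buyer, long inactive: expected
  (2, '2024-06-10'),                     -- recent buyer, but logged in recently
  (3, '2024-04-01');                     -- inactive, but purchase is too old
"""

# A candidate answer to the SQL prompt in this section (hypothetical model output).
CANDIDATE = """
SELECT DISTINCT u.id
FROM users AS u
JOIN purchases AS p ON p.user_id = u.id
WHERE p.purchased_at >= date(:today, '-30 days')
  AND u.last_login < date(:today, '-60 days')
ORDER BY u.id
"""


def verify(query: str) -> list[str]:
    """Run a candidate query on the fixture and report which checks fail."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(FIXTURE)
    try:
        rows = [r[0] for r in conn.execute(query, {"today": "2024-06-30"})]
    except sqlite3.Error as exc:
        return [f"query failed: {exc}"]
    finally:
        conn.close()

    failures = []
    if len(rows) != len(set(rows)):
        failures.append("duplicate rows: check joins or a missing DISTINCT")
    if set(rows) != {1}:
        failures.append(f"wrong users: expected {{1}}, got {sorted(set(rows))}")
    return failures
```

An empty list means the candidate passed; otherwise the failure strings are the "corrections" to send back with the regenerate request.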

Template:

[Your request]

After generating, verify your output against these criteria:

1. [verification check 1]

2. [verification check 2]

3. [verification check 3]

If any check fails, regenerate.

Example:

Write a SQL query to find users who made purchases in the last 30 days but haven't logged in for 60 days.

After generating, verify your output against these criteria:

1. Does it correctly filter by date ranges using proper date functions?

2. Does it join necessary tables and avoid cartesian products?

3. Will it handle users with no purchases without errors?

If any check fails, regenerate with corrections.

Conclusion

Here’s why these work: they all add structure AROUND the generation step.

Bad prompting: “Do the thing”

Engineer prompting: “Plan how you’ll do the thing, do the thing, verify the thing”
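The plan, do, verify pattern can be sketched as a small loop around any text generator. Here `generate` is a hypothetical callable standing in for whatever model client you use; checks are plain Python predicates, and the descriptions of failed checks are fed back into the next attempt:

```python
from typing import Callable

Check = tuple[str, Callable[[str], bool]]  # (description, predicate on the output)


def plan_do_verify(
    request: str,
    checks: list[Check],
    generate: Callable[[str], str],  # hypothetical: prompt in, model text out
    max_attempts: int = 3,
) -> str:
    """Ask for a plan, then an answer; regenerate with feedback until all checks pass."""
    # Plan: get the approach before any output is produced.
    plan = generate(f"Before answering, outline your approach in 2 sentences:\n{request}")
    prompt = f"{request}\n\nFollow this plan:\n{plan}"
    for _ in range(max_attempts):
        # Do: generate a candidate answer.
        output = generate(prompt)
        # Verify: run every check and collect the ones that fail.
        failed = [desc for desc, ok in checks if not ok(output)]
        if not failed:
            return output
        # Feed the failed checks back so the next attempt can correct them.
        prompt = f"{prompt}\n\nYour previous answer failed these checks: {'; '.join(failed)}. Regenerate."
    raise RuntimeError(f"no output passed all checks after {max_attempts} attempts")
```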

Models are prediction engines. Give them a better scaffold and they predict better outputs.

The gap between mediocre and production-grade output is usually prompt design, not model capability.